So my self-hosted CMS host got compromised, and I got stuck without the means to publish, edit, or create any articles for a long time. It wasn't from a lack of ideas or things I wanted to write about. Getting it back online was just about last on my priority list with everything going on in the world and in my personal life. Thanks to using a static site generator, though, I could just leave it down.

But this past week I decided to look into it and I figured I'd share a couple of thoughts as I went through my recovery process.

Digital Ocean Notification

This whole incident started in late 2021, when I got an email from Digital Ocean saying that the VPS hosting my CMS had been compromised and found to be taking part in a botnet, so they had gone ahead and disconnected it from the internet. If my site weren't on a static site generator, I'd have been horrified and scrambling. Luckily I was able to go get some sleep, and on the next weekend day I was able to:

- Use the web console to dump the database from the MariaDB instance.

- Zip up the files that needed to be archived on that server.

- Create a storage instance on Digital Ocean and copy those files to it.

- Mount the storage on a new, connected VM and use it to transfer the files over rsync to my NAS.

And then for a year, it's just sat there on my NAS.

Though before I tore down that old VM, I wanted to flex those security muscles and try investigating why my server was flagged as a DDoS source. So here are just a couple of the investigation tactics I went through:

- Investigated key areas of the file system, including all the folders that would typically be in the path, and any user folders that might be present. Using tree and some text difference tools, I could at least compare against a stock Ubuntu 18.04 server to see if there was anything nasty hidden in any important folders.

- As part of investigating the file system, I looked for any new systemd services that may have been installed, but I couldn't find anything in the logs or in /etc/systemd.

- Also, there were no weird processes in htop or any weird connections in ss.
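
The baseline comparison from the first bullet can be sketched in a few lines of Python. This is only an illustration of the idea, not what I actually ran (that was tree plus diff tools); the function names and example paths are made up for the sketch.

```python
import os


def list_files(root):
    """Return the set of file paths under root, relative to root."""
    found = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            found.add(os.path.relpath(full, root))
    return found


def unexpected_paths(baseline, suspect):
    """Paths present on the suspect system but absent from the baseline."""
    return sorted(set(suspect) - set(baseline))


if __name__ == "__main__":
    # Hypothetical listings; real ones would come from list_files() run
    # against copies of the same directory on each system.
    baseline = ["usr/bin/ls", "usr/bin/ssh"]
    suspect = ["usr/bin/ls", "usr/bin/ssh", "usr/bin/.hidden-tool"]
    for path in unexpected_paths(baseline, suspect):
        print("not in baseline:", path)
```

Note that this only catches files that were added. A binary that was replaced in place keeps its path, so spotting that would need a checksum comparison on top.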

So after digging through all of this, I went back to thinking about what my server was running. It was running Drupal, duh. But it was also running a private GitLab instance that was used for an abandoned project by a team I used to work with, which made updating it a low priority for me at the time. Obviously, treating it as low priority was a mistake. Everything is an ingress point. And it just so happens that there was a CVE published basically days apart from my incident.

Lesson learned the hard way: Monitor and update your software, or restrict it from the public.

Updating the CMS to Drupal 9.

So before I set up any software stack again, I needed to get my CMS off of Drupal 8 so I could continue getting security updates on Drupal 9. And to my surprise, updating to Drupal 9 was legitimately easy. This may be because the layout of the site isn't managed by Drupal, but tools like Upgrade Status made finding which Composer dependencies needed updating an absolute breeze. And with Composer-managed Drupal now being fully mature, I was able to swap a lot of third-party packages for more modern alternatives from the Drupal team themselves.

I figured this would be easy, but I had no clue that it would be this easy. It probably took about 2 hours. Again, that's probably thanks to how small my site is, but it's still very nice not to have a headache over a major framework upgrade.

Spinning Up New Containers... A Year Later

Since this server went down, I've moved all of my personal infrastructure to containers, which meant it was time to containerize the LNMP stack that runs this CMS.

I went with a 2 container system deployed via Portainer, which is my favorite way to deal with homelab infrastructure. And yes, I said homelab. I chose to host my CMS locally at home. Considering what I’ve been saying about security, it’s fair to ask why I would introduce a source of ingress into my own household. Well, here’s the trick: in my nginx configuration for that site I have the following:

allow 192.168.1.0/24;

deny all;

That's right: unless I'm deploying to Netlify, the CMS container is closed off from the world. The MariaDB container is only on a network with the CMS container. And even if someone compromises the container, I can just revert to an earlier snapshot.
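
If the allow/deny pair looks too simple to be doing anything, here is a rough Python model of how nginx evaluates it: rules are checked in order and the first match wins. This is a sketch for illustration only, not nginx's actual code, and the `is_allowed` helper and `RULES` list are names I made up.

```python
import ipaddress

# Mirrors the nginx rules above: checked in order, first match wins.
RULES = [("allow", "192.168.1.0/24"), ("deny", "all")]


def is_allowed(client_ip, rules=RULES):
    """Return True if the first matching rule for client_ip is an allow."""
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        if target == "all" or addr in ipaddress.ip_network(target):
            return action == "allow"
    # With no matching rule, nginx lets the request through.
    return True


if __name__ == "__main__":
    print(is_allowed("192.168.1.42"))  # a machine on the home network
    print(is_allowed("203.0.113.7"))   # some address out on the internet
```

The order matters: putting the deny first would lock out the home network too, since it matches every address before the allow is ever reached.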

I know that no matter how much process I put into place, a personal project isn’t going to be on the same level as a job. Sometimes you just want to be social on a weekend and not run software updates all day. I’ll take the minor inconvenience of needing to be on my home network when it comes time to write content over always needing to stay alert for a fun side project.

Side tangent: I do still need to toggle the restriction when I'm ready to publish. As of yet, Netlify does not offer a reliable set of IP subnets to deploy from, so when it comes time to build, I need to lift the restriction temporarily. This could be improved by going with a different host, though.

Build time

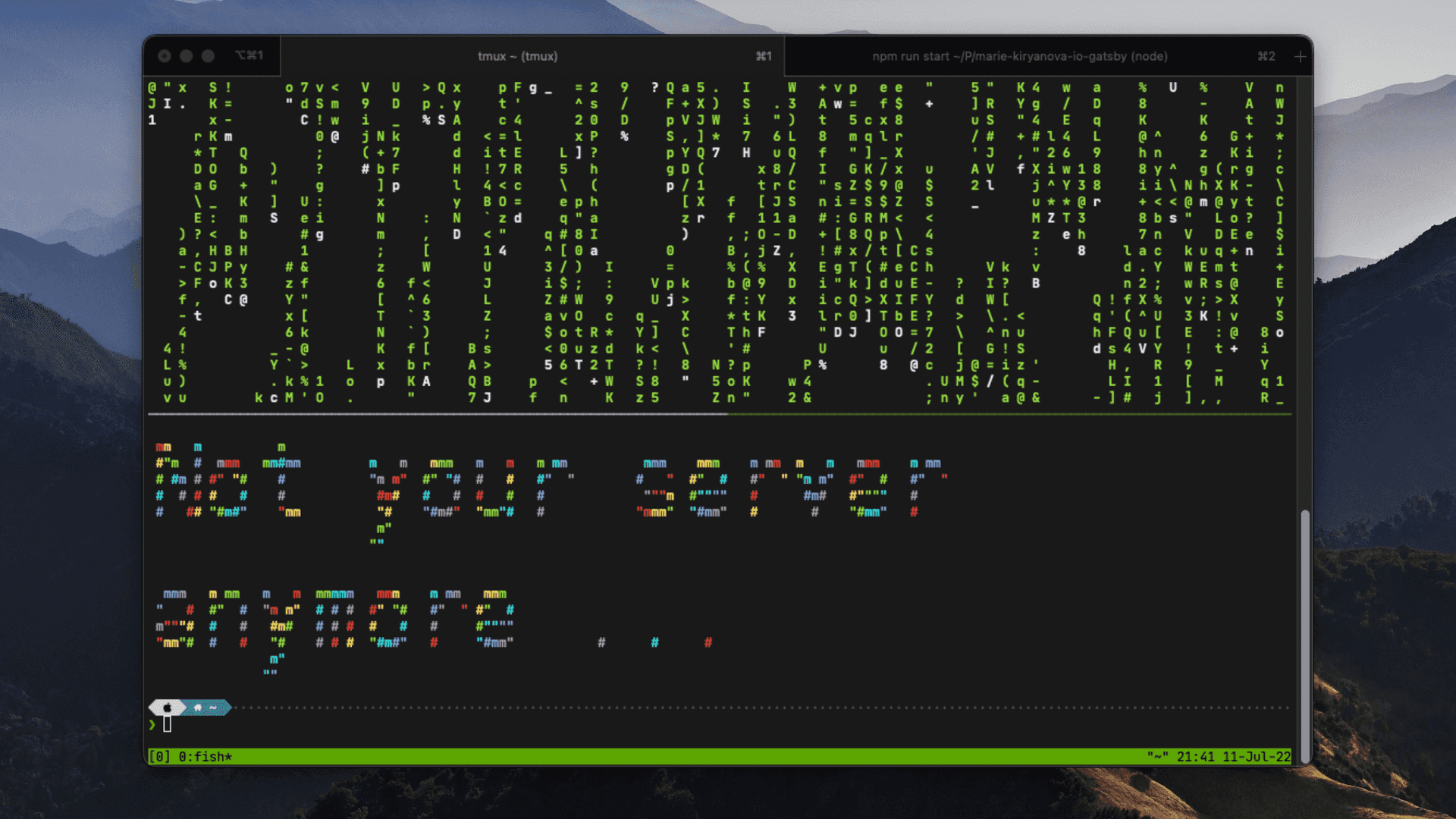

Certain node projects suffer from something I’ve dubbed “node-gyp deterioration”. Here I am on a shiny new M1 Max machine trying to build my project and craaaaaaash.

Turns out that old dependencies with linked C code probably aren’t compatible with the latest fancy platforms and architectures. While it wouldn’t be hard to just use a container of a platform that could build the site, I wanted to take the time to update my Gatsby version from 2 to 4.

Again, this was pleasantly easy; most of the packages updated without a single code change needed. All except gatsby-image. Gatsby Image was deprecated in favor of gatsby-plugin-image, which has a pretty different API from the original. A project-wide search got all the instances of the Img component and GraphQL fragments swapped out pretty fast, though.

It wasn’t quite as easy as getting Drupal updated but, impressively enough, it wasn’t far behind.

And Here We Are Again

If you’re reading this right now, that means I was able to go through this plan and get everything working again. Ideally the site functions almost identically to how it did before. Only now I can update its contents again.

After doing some bug fixes on my front-end, I did find a ton of things I wanted to update in the code based on what I’ve learned over the past two years maintaining a component library professionally, but that’s content fodder for another day.

For now I hope you take away at least a couple of these key things:

- Don’t host more than one public service on a server at a time if you’re not ready to be the DevOps team that keeps everything updated. There’s a reason that PaaS systems are so popular: no one wants to be responsible for patching literally everything.

- If you’re running a statically generated site, only your editors and build bot need access to the CMS and nothing else. Why even give attackers a chance if you don’t have to?

- Updating dependencies, while annoying, has gotten easier over the years. It’s worth at least trying to keep them up to date when you have the time.