For a technology enthusiast like me, few things are as exciting as seeing computers and networks get faster. When I moved into my new apartment, I was pleasantly surprised that Verizon offered a fully symmetrical gigabit connection at our location. While I'd had gigabit download speeds at my previous apartment, I had never had the pleasure of upload speeds above 30Mbit, thanks to Comcast in Cambridge (why there is no competition in Cambridge, a large tech center, baffles me, but that's outside the topic of this post).

After we moved in, I set up my desktop, ran my Cat6 cable to my room, and plugged it in. And I saw that beautiful 900/900 result on speedtest.net. But like all synthetic testing, this was a lie. One source could max out the connection, sure. But we quickly found out that if any resident was uploading anything significant, our download speed would grind to a halt. It absolutely stopped. 1Mbit.

We had never experienced this issue before because our last connection was capped at a measly 40Mbit upload on a good day, so we never ran into that edge case. We tried everything: resetting the router to factory defaults, installing custom firmware, playing with QoS settings, and simply capping speeds. Nothing really worked, and the router died almost daily. The final diagnosis was simple: it was consumer hardware.

Not to dump on consumer hardware, but it isn't targeted at people like my roommates and me. No matter how nice a consumer router is, 4 power users/developers are going to destroy it, and the upgraded connection let that happen.

The Build

So we set out to build a proper server, and the first question was: how many servers do we need? At this point we only had a basic NAS running on an old Core 2 Quad (from 2007!). We didn't own any real server hardware, but we knew we'd use it if we did. With that in mind, the answer was the buzzword everyone uses: virtualization.

So I picked out some parts to put together a beefy server. At the heart of the machine is a 10-core, 20-thread engineering sample Xeon, which fit into a spare X99 board I already had. I took 32GB of RAM from my workstation, which had 64GB in it. I bought the cheapest graphics card I could find just to get some kind of console output, along with a basic Rosewill 4U case off of Amazon. Add a Corsair PSU I already had and a CPU cooler, and the build was complete! It wasn't the best, but it was definitely an upgrade from our Core 2 Quad server.

Virtualization

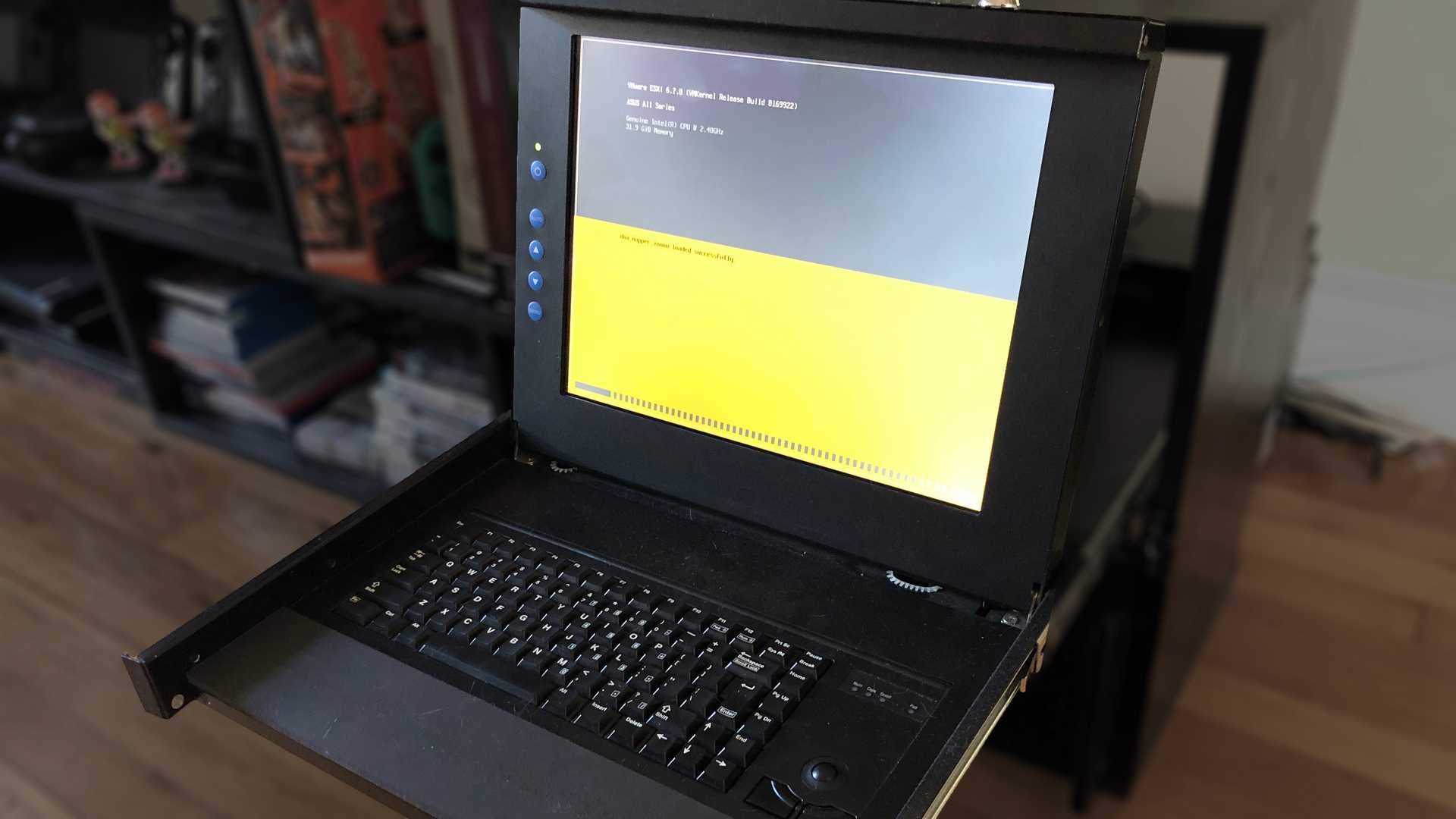

I tried a number of hypervisor options, including FreeNAS (which we were already using), unRAID, and a custom Linux solution, but ended up settling on ESXi. The documentation and support for that platform are immense, and the free license has few restrictions for a hobbyist. The most important one is that a single VM can have at most 8 vCPUs, although you can still run 2 VMs whose combined vCPU count exceeds 8.

We settled on the following VMs:

- pfSense - Routing and Firewall

- FreeNAS - NAS Services

- Debian - Misc Application Server

This layout gave us flexibility and separation between our services. There are plenty of better tutorials elsewhere on setting these services up, so I won't go into configuration details. But the results speak for themselves.

Results

Our internet has only gone down once since this was set up, and that was when Verizon itself was experiencing issues. I've been very satisfied with having a single quiet server that does everything we need. I was able to move all our HDDs into the new server, retire the old Core 2 Quad build, and pass it on to a friend as their first desktop to tinker with and learn on.

As a side note, a Ubiquiti AC Pro access point replaced our old WiFi access point, and it has been perfectly stable as well.

Improvements

- 10Gb - This has been slowly in progress. I already have a single 10Gb fiber client (my desktop) plugged directly into a 10Gb card in the server. It's on its own subnet and probably suffers from some overhead; the fastest I've gotten out of a test is 6Gb. A proper switch will be needed, and the server will probably need a 40Gb uplink.

- More hardware - I know I just praised this server for being a compact piece of tech that does everything I need, but a proper rack would be fun to build. The Rosewill case is frankly atrocious for swapping drives, and without a backplane the drive cabling looks like a crime scene.
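On the 10Gb item: a point-to-point link like the desktop-to-server fiber is usually checked with a TCP throughput test. The post doesn't say which tool produced the 6Gb number (iperf3 is the common choice), so the following is only a minimal sketch, assuming Python's standard library, of what such a test measures: push a fixed number of bytes over one TCP connection and divide bits by elapsed time. The names `measure_throughput_gbps` and `_sink` are hypothetical, and both ends run on one machine here, so it measures loopback rather than the fiber link.

```python
import socket
import threading
import time

CHUNK = 1 << 20  # bytes per send/recv call (1 MiB)


def _sink(server: socket.socket, received: list) -> None:
    """Accept one connection and count bytes until the sender closes it."""
    conn, _ = server.accept()
    total = 0
    with conn:
        while True:
            data = conn.recv(CHUNK)
            if not data:  # empty read: the sender has closed the connection
                break
            total += len(data)
    received.append(total)


def measure_throughput_gbps(total_bytes: int = 64 * CHUNK) -> float:
    """Push total_bytes over one loopback TCP connection; return Gbit/s.

    Hypothetical helper for illustration only, not the tool behind the
    6Gb figure. Both ends run on this machine, so it measures loopback.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]

        received: list = []
        receiver = threading.Thread(target=_sink, args=(server, received))
        receiver.start()

        payload = memoryview(b"\x00" * CHUNK)  # reused buffer, no per-send copy
        start = time.perf_counter()
        with socket.create_connection(("127.0.0.1", port)) as client:
            sent = 0
            while sent < total_bytes:
                n = min(CHUNK, total_bytes - sent)
                client.sendall(payload[:n])
                sent += n
        # Closing the client signals EOF; stop the clock only once the
        # receiver has drained every byte.
        receiver.join()
        elapsed = time.perf_counter() - start

    if received != [total_bytes]:
        raise RuntimeError(f"expected {total_bytes} bytes, got {received}")
    return total_bytes * 8 / elapsed / 1e9


if __name__ == "__main__":
    print(f"{measure_throughput_gbps():.1f} Gbit/s over loopback")
```

To test the real link, the `_sink` half would have to run on the server and the sending loop on the desktop. A single Python stream is also CPU-bound and can undershoot what the hardware can carry, so a number from a sketch like this is best read as a floor.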